Portfolio.WALL-E


> ./intro

download source code

download final report

view poster

> ./stats

Status: Completed Dec/2014

Languages: Arduino C, Python, C++

Software: ROS, OpenCV, NiTE

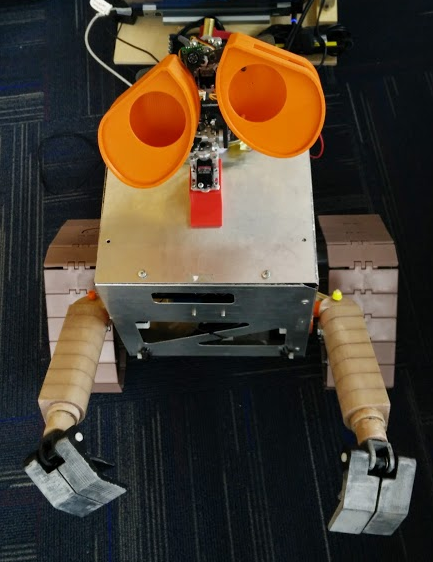

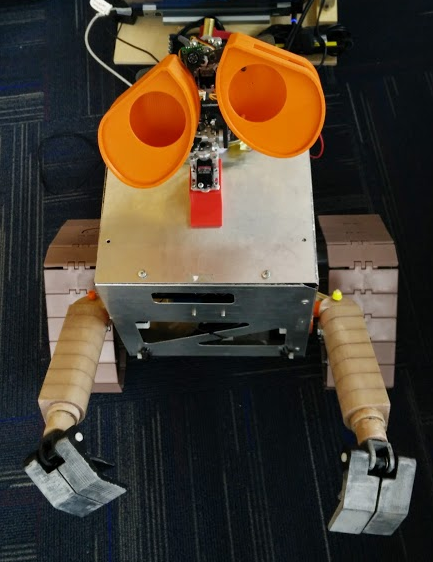

Hardware: Arduino Uno, Kinect, IR sensor, WALL-E Chassis

Documentation: Complete

> ./documentation

WALL-E was a project I built with a friend, both as a personal interest project and as the final deliverable for our Computational Robotics class. My friend, Adela Wee, handled most of the hardware and the wiring harness, while I wrote most of the code.

The goal of WALL-E was non-verbal interaction between a human operator and a robot. We achieved this through gesture recognition with a Microsoft Kinect: hand detection is done through the NiTE API, and all communication between our various scripts goes through ROS.
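To make the gesture step a bit more concrete, here is a minimal sketch of how a stream of tracked hand positions could be turned into discrete gestures. It is not the code WALL-E actually ran: it assumes the hand tracker reports `(x, y, z)` positions in millimetres, and the `SwipeDetector` class, the swipe gestures, and all thresholds are hypothetical choices made for illustration.

```python
from collections import deque
from typing import Deque, Optional, Tuple

# Hypothetical hand sample (x, y, z) in millimetres, assumed to come from a
# NiTE-style hand tracker. Not taken from the WALL-E source.
HandPoint = Tuple[float, float, float]


class SwipeDetector:
    """Classify left/right swipes from a sliding window of hand positions.

    The window size and thresholds are illustrative assumptions.
    """

    def __init__(self, window: int = 10, min_dx_mm: float = 250.0,
                 max_dy_mm: float = 100.0) -> None:
        self.history: Deque[HandPoint] = deque(maxlen=window)
        self.min_dx_mm = min_dx_mm  # horizontal travel needed to count as a swipe
        self.max_dy_mm = max_dy_mm  # vertical drift allowed during a swipe

    def update(self, point: HandPoint) -> Optional[str]:
        """Add one hand sample; return 'swipe_left', 'swipe_right', or None."""
        self.history.append(point)
        if len(self.history) < self.history.maxlen:
            return None  # not enough samples yet to judge the motion
        dx = self.history[-1][0] - self.history[0][0]
        dy = abs(self.history[-1][1] - self.history[0][1])
        if abs(dx) >= self.min_dx_mm and dy <= self.max_dy_mm:
            self.history.clear()  # reset so one swipe is not reported twice
            return "swipe_right" if dx > 0 else "swipe_left"
        return None
```

In a ROS setup like the one described above, a node could call `update()` for each new hand position and publish any non-`None` label on a topic that the motor-control node subscribes to; the topic layout is likewise an assumption.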

WALL-E was designed with 9 degrees of freedom, though only 7 of them were working by the time we presented. Most of the movement happens in his eyes, since humans also communicate largely by reading each other's facial expressions.
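One way to think about expressions on a multi-joint robot is as named target poses that the controller blends toward. The sketch below illustrates that idea under stated assumptions: the joint names, angles, and expression set are hypothetical (the real WALL-E joint layout is not described here), servos are assumed to accept 0 to 180 degree commands, and `working_joints` shows how commands for joints that are not functional (as with the 2 of 9 that weren't working at presentation time) could simply be dropped.

```python
from typing import Dict, Set

# Hypothetical joint names and angles in degrees; not the actual WALL-E layout.
Pose = Dict[str, float]

EXPRESSIONS: Dict[str, Pose] = {
    "neutral": {"left_eye_tilt": 90.0, "right_eye_tilt": 90.0, "neck_pan": 90.0},
    "sad": {"left_eye_tilt": 60.0, "right_eye_tilt": 120.0, "neck_pan": 90.0},
    "curious": {"left_eye_tilt": 110.0, "right_eye_tilt": 70.0, "neck_pan": 120.0},
}


def clamp(angle: float, lo: float = 0.0, hi: float = 180.0) -> float:
    """Keep a command inside an assumed hobby-servo range."""
    return max(lo, min(hi, angle))


def blend(start: Pose, target: Pose, t: float) -> Pose:
    """Linearly interpolate every joint from start to target, with t clamped to [0, 1]."""
    t = max(0.0, min(1.0, t))
    return {joint: clamp(start[joint] + (target[joint] - start[joint]) * t)
            for joint in target}


def working_joints(pose: Pose, disabled: Set[str]) -> Pose:
    """Drop commands for joints marked as not functional."""
    return {joint: angle for joint, angle in pose.items() if joint not in disabled}
```

Stepping `t` from 0 to 1 over a few hundred milliseconds and sending each intermediate pose to the servo controller would give a smooth transition between expressions instead of an abrupt jump.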

We made a really pretty poster for this project!

Here's an additional video showing what his expressions look like:

Also, because WALL-E is adorable, have another picture:
